|

I am currently a post-doctoral research associate in the Oxford Machine Learning in NeuroImaging Lab (OMNI) in the Department of Computer Science, working with Dr. Ana Namburete. I studied for my DPhil (PhD) in the Analysis Group at the Wellcome Centre for Integrative Neuroimaging at the University of Oxford, where I researched deep learning-based approaches for neuroimaging analysis, supervised by Prof. Mark Jenkinson and Dr. Ana Namburete and funded by the UKRI EPSRC/MRC as part of the ONBI DTC. My research uses computer vision and deep learning to solve medical imaging problems. I am especially interested in robust deep learning and in methods to overcome the barriers to clinical translation of deep learning, and I am open to collaboration opportunities. |

|


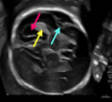

Joshua Omolegan, Pak Hei Yeung, Madeleine K Wyburd, Linde Hesse, Monique Haak, Intergrowth Consortium, Ana IL Namburete, Nicola K Dinsdale. ISBI 2025. Paper / Code. Test time adaptation for robust subcortical segmentation in fetal ultrasound. |

|

Vaanathi Sundaresan, Nicola K Dinsdale. Low Field Pediatric Brain Magnetic Resonance Image Segmentation and Quality Assurance Challenge, 2024. Paper / Code. Winner of LISA Challenge 2024 @ MICCAI 2024. |

|

Nicola K Dinsdale, Mark Jenkinson, Ana IL Namburete. bioRxiv, 2024. Project Page / Paper / Code. We propose UniFed, a unified federated harmonisation framework, which enables three key processes to be completed: 1) the training of a federated partially labelled harmonisation network, 2) the selection of the most appropriate pretrained model for a new unseen site, and 3) the incorporation of a new site into the harmonised federation. |

|

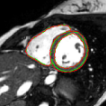

Madeleine K Wyburd, Nicola K Dinsdale, Ana IL Namburete, Mark Jenkinson. Medical Image Analysis 2024. Paper / Code. Our model, TEDS-Net, generates anatomically plausible segmentations by deforming a prior shape with the same topology as the anatomy of interest. |

|

Jayroop Ramesh, Nicola K Dinsdale, the INTERGROWTH-21st Consortium, Pak-Hei Yeung, Ana IL Namburete. MICCAI 2024 [Early Acceptance - top 11%]. Paper / Code. We propose an uncertainty-aware deep learning model for automated 3D plane localization in 2D fetal brain images.